Abstract

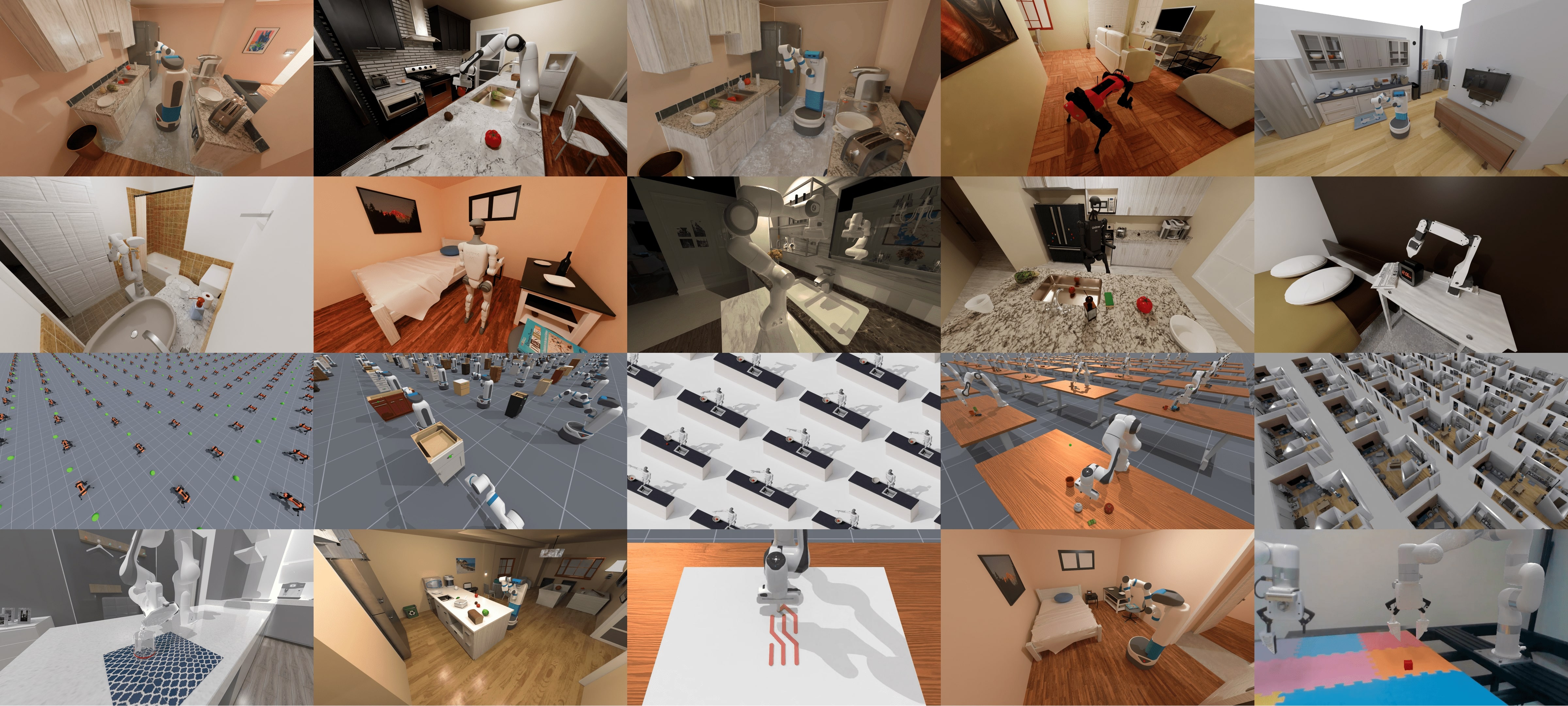

Simulation has enabled unprecedented compute-scalable approaches to robot learning. However, many existing simulation frameworks support only a narrow range of scenes and tasks and lack features critical for scaling generalizable robotics and sim2real. We introduce and open-source ManiSkill3, the fastest state-visual GPU-parallelized robotics simulator with contact-rich physics targeting generalizable embodied AI. ManiSkill3 supports GPU parallelization of many aspects, including simulation and rendering, heterogeneous simulation, point cloud and voxel visual input, and more. Simulation with rendering on ManiSkill3 can run 10-1000x faster with 2-3x less GPU memory usage than other platforms, achieving up to 30,000+ FPS in benchmarked environments. Tasks that used to take hours to train can now take minutes. To further support scale, we provide the largest dataset of interactable scenes in one simulator, with demonstrations to support and investigate scaling reinforcement and imitation learning.

Speaker

Stone Tao is a PhD student at UC San Diego, advised by Professor Hao Su. His current research interests revolve around the general goal of building efficient, adaptable, and capable embodied AI. A significant portion of his work focuses on scalable datasets and benchmarks for embodied AI, at the intersection of simulation and machine learning. To this end, his research encompasses reinforcement learning, simulation, few-shot learning, imitation learning, and auto curriculum learning, as well as high-quality robotics and game benchmarks.

Video

Extra Details

Speaker Website / Paper Link / Paper Code / Paper Project Page